Key Points:

- Human mistakes result from lack of real-time data and cognitive bias. Mistakes by people are a main cause of data breaches and project failures that negatively affect lives.

- Reduce bias through collaboration and communication among all levels. Break down silos and decrease technical jargon. Empower and encourage all stakeholders with bias awareness, two-way feedback, learning opportunities, and big-picture thinking.

- Intelligent automation reduces mistakes from bias, apathy, and stress by eliminating tedious manual processing and providing up-to-date data. More time can then be spent on informed problem-solving and communicating about solutions.

“Hackers gonna hack” is a play on the catchphrase “haters gonna hate” and stemmed from a response by the former German Federal Minister of the Interior in 2010 when local television news reported that a newly released electronic German identity card was easily hacked.

The Federal Minister’s statement was, “Some hackers will always be able to hack something, but, uh, the reliability and security of the new identity card are beyond dispute,” which suggested that adequately testing the security of a solution that would contain the nation’s personal data was not taken seriously.

In today’s world, “hackers gonna hack” isn’t an excuse to avoid properly assessing, prioritizing, and mitigating risks. In fact, the White House made this clear in May 2021 with Executive Order 14028 on Improving the Nation’s Cybersecurity.

Human mistakes are a primary cause of data breaches, ransomware incidents, supply chain risk, third-party vulnerabilities, and insufficient investment in innovation. Understanding how to counteract these mistakes is a serious matter given ransomed healthcare systems, fraud, stolen intellectual property, and critical infrastructure breakdowns like the one Colonial Pipeline experienced.

Mistakes result from a lack of data on current risks, social pressures, and cognitive bias (mental rules). However, mistakes can be difficult to avoid even when you are aware of bias. People become attached to beliefs about acceptable behavior based on their “identity and roles.” For example, a non-technical user might think security is the job of the IT department and ignore training on risks.

The Risks of Mental Bias and Social Conformity

On August 29, the launch of Artemis I was scrubbed and postponed until possibly October due to rocket engine issues. Although this is a test flight and no crew will be on board, plans to send people to the moon and Mars are a reminder that risk assessment must include mitigating social pressures and mental bias. In 1986, bias and social pressure contributed to the tragic loss of the seven-member crew of the Space Shuttle Challenger when organizational expectations resulted in groupthink, and a known cold-temperature issue with the O-rings was ignored on that frosty January launch morning.

The term groupthink, per a New York Times article on the Challenger, was “coined in 1972 by Irving L. Janis, a Yale psychologist and a pioneer in the study of social dynamics. He called groupthink ‘a mode of thinking that people engage in when they are deeply involved in a cohesive in-group, when the members’ strivings for unanimity override their motivation to realistically appraise alternative courses of action.’”

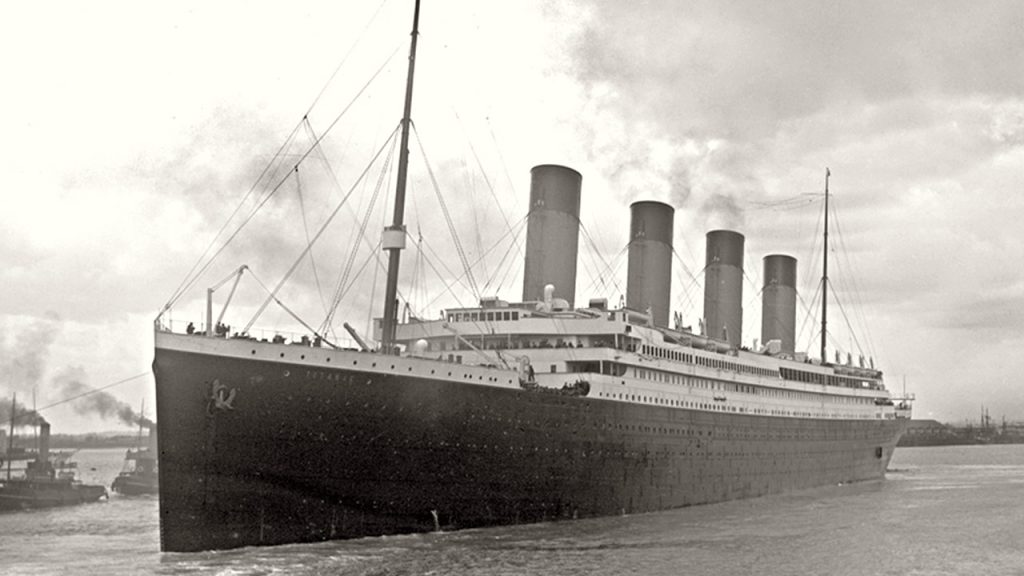

Groupthink also occurred when the Titanic sank in 1912, killing more than 1,500 people. David Owen, a Partner and Cyber Risk leader at Deloitte, wrote an article, “How ransomware is exposing Titanic leadership assumptions around critical infrastructure,” where he states that with the Titanic it’s “generally accepted that over-confident decisions and assumptions were key contributors – such as whether icebergs are actually a big threat” and a “race-for-glory meant bigger and faster ships, more northerly routes, and pressure on captains to sail fast.”

Owen notes that “today’s ransomware scourge has a number of similarities” and that “many organizations often have been over-confident in assumptions that:

- Past risk management decisions over existing assets are adequate for the rising threat

- The controls they expect to be in place are actually effective”

He suggests reframing thinking, stressing that organizations must “develop a high-level understanding of the organization’s processes, threats, key risks, control maturity, suppliers, and people. This will help to orientate on where to focus and provide an overall sense of the risk and any obvious gaps (e.g. does the organization understand its own assets?)”

Understanding Cognitive Bias

Seeking a high-level understanding of people and vulnerabilities to your assets should mean that you take bias seriously as it too often is the real “decision maker” behind human mistakes – including clicks on phishing links, mistakes from overworked security teams, or insufficient funding by the C-suite for innovative solutions.

The National Institute of Standards and Technology (NIST) Interagency Report 8286, Integrating Cybersecurity and Enterprise Risk Management (ERM), focuses on communication, mitigating bias, and properly identifying an organization’s assets and the risks to those assets.

You can review key concerns and goals outlined in the NIST guide in our article, “Cyber resilience requires managing human risks and leveraging innovation and automation.”

Cognitive bias became more clearly understood as a risk with the development of behavioral economics. Nobel Prize winner Daniel Kahneman explains how we make decisions in terms of biases and heuristics in his revolutionary book, Thinking, Fast and Slow.

Serious mistakes result from two factors:

- Relying on unexamined “accepted wisdom,” past behavior, and the status quo due to inaccurate, limited mental rules when facing uncertainty, new information, familiar or complex choices, social pressure, and/or time constraints.

- Self-limiting beliefs about social identity and power hierarchies often restrict voicing of opinions, two-way feedback, and creativity.

Following these false “old” rules can result in negative outcomes when applied to current situations where they don’t belong. However, social pressures, power hierarchies, and investment in the status-quo often reinforce continued mistakes. Something serious often ends up happening before the public, industry, and regulatory bodies realize past rules don’t work anymore.

Data privacy is now receiving a larger regulatory focus because people’s personal data, including data on executives at your company, is actively being used by cybercriminals to profile and target them. Threat actors know users are biased to click on familiar links because most people are generally unaware of sophisticated phishing techniques and can be tricked when cybercriminals spoof legitimate people and companies.

Threat actors are innovative and have come up with a plethora of techniques, including tactics for circumventing multifactor authentication, fake Microsoft direct mail with malware-laden USB drives, SMS phishing (smishing) imitating brands like Amazon, voice phishing (vishing), and advanced deepfakes that eerily imitate politicians, CEOs, and celebrities.

Executives are also targeted with phishing, whaling, and deepfakes. In June, mayors of several European capitals were duped into holding video calls with a deepfake of their counterpart in Kyiv.

Bias is basically a form of information blindness due to inadequate methods of information sharing and collaboration, as well as acceptance of mental rules stemming from cultural, educational, and social pressures, a kind of groupthink.

An insightful example of overcoming organizational bias was shown in the movie Moneyball, based on the 2003 nonfiction book by renowned author Michael Lewis. Billy Beane, the general manager of the Oakland Athletics baseball team, was able to recognize bias in how players were drafted due to his own negative emotional experience as a young pro baseball player.

As GM, Beane stood up to bias and proactively sought out unusual collaborations and embraced using data technology to identify talent other teams overlooked. By setting aside “accepted wisdom” and giving power to an analyst, a new way of decision-making was born that improved the performance of the team within their budget constraints.

Collaboration & Validation Reduce Bias

How ideas – including cybersecurity concepts – are labeled, presented, and perceived also results in communication problems and creates barriers to collaboration. Too much jargon causes people to tune out because it seems too familiar, or conversely, it seems too complex and outside their influence. This applies to any type of learning.

Two stories from CSO address how overreliance on buzzwords is detrimental and contributes to bias and miscommunication about cyber risks and solutions. Instead, you should personalize how you explain things using words and examples people relate to:

- 9 Types of Computer Virus and How They Do Their Dirty Work – “Whatever taxonomy we use to explain cyberthreats, it shouldn’t be overly rigid, but should instead make it easier to communicate important information about those risks. And that means tailoring your language for your audience, according to Ori Arbel, CTO of CYREBRO, a security services provider.”

- Sorting Zero-Trust Hype from Reality – It seems as if everyone is playing “buzzword bingo” when it comes to Zero Trust and its implementation … Egress Vice President of Product Management Steve Malone observes, “It’s not something you can buy from a single vendor. Zero Trust is a security methodology … The importance of people, processes and technology can’t be over-emphasized.”

The importance of collaboration and focusing the team on big-picture goals is illustrated in the article, “Building a Strong SOC Starts with People,” where author Neil Weitzel, SOC Manager at ThreatX, said, “I manage a security operations center (SOC) in the midst of the Great Resignation and a massive cybersecurity skills gap. During this time, I’ve learned a few surprising things about how to recruit and maintain a cohesive SOC team.”

Weitzel’s tips include:

- Give and Receive Regular Feedback – He states, “I have an open-door policy with my team, which allows for a consistent feedback loop. If I need to be doing more for my team, I expect them to tell me where I can improve; on the flip side, hearing if something is going well helps me better calibrate my leadership style to my team.”

- Rotate Tasks and Responsibilities – “Within my team, I have everyone rotate between managing alerts, self-paced training, and project work … Additionally, finding ways to automate regular tasks will reduce the stress and burden placed on the team so they can focus on more strategic work.”

- Promote Interactions Throughout the Company – “It can be easy to get lost looking at each tree in the SOC, when you should instead be focusing on the forest … I encourage my team to complete a quarterly ‘Do Good’ project, which focuses on the needs of the company and the larger security community.”

Weitzel emphasized that supporting ongoing learning and projects, as well as automation, enables his team to be less stressed, feel valued, and focus on strategic activities. Intelligent automation is available as a tool to automate high-volume data, reduce tedious manual processing, and provide a big-picture view.

A consistent feedback loop with an open-door policy is one of Weitzel’s tips. One of the findings from behavioral economics is that it’s easier to recognize other people’s mistakes than your own. This is why red-teaming, or purposefully encouraging alternative viewpoints, can prevent bias and is highly recommended for decision-making.

Inclusion and giving power to marginalized voices is a hot topic in organizations and our educational system, and is often discussed alongside psychological safety and growth mindset. Inclusion should mean you empower and gain feedback from everyone and look out for instances of bias due to race, gender, age, educational background, and even temperament. A book illustrating some of these concepts is the New York Times bestseller Quiet: The Power of Introverts in a World That Can’t Stop Talking by Susan Cain.

Psychological safety is a way of reducing hierarchical power dynamics, as well as social, cultural, and temperament biases. It is associated with greater productivity, harmony, and innovation. Growth mindset (as opposed to fixed mindset) means viewing the world as continually changing and evolving, and realizing that the self can also continually assess, change, and evolve. It is often linked to neuroplasticity, the brain’s capacity to change with learning and experience.

The insightful book Smarter Faster Better: The Transformative Power of Real Productivity by Pulitzer Prize–winning journalist Charles Duhigg discusses topics like psychological safety and growth mindset in detail with real-life stories from “CEOs, educational reformers, four-star generals, FBI agents, airplane pilots, and Broadway songwriters.”

Duhigg quoted Ed Catmull, the former president of Disney Animation, who told him that “Most people are too narrow in how they think about creativity. So we spend a huge amount of time pushing people to go deeper, to look further inside themselves … We all carry the creative process inside us; we just need to be pushed to use it sometimes.”

Many cyber leaders are realizing they need to look to other avenues to recruit cybersecurity staff and are focusing more on curiosity, passion, and a willingness to learn rather than whether or not someone has a college degree and on-the-job security experience.

The idea of bias limiting us really isn’t new. The ancient philosopher Plato is known for his Allegory of the Cave story that symbolically references unexamined human perception, describing it as if we were mental “prisoners” chained to inaccurate mental constructs and unable to see reality clearly. We often miss the forest and focus on the tree.

As quantum computing advances processing power, it’s also becoming better known that, at the quantum level, a system can exist in a superposition of possible states and takes on a definite value only when it is measured. Loosely speaking, what is observed depends on the act of observation. By analogy, each person’s point of view is shaped or limited by personal perceptions. Quantum cognition, which borrows the mathematics of quantum theory to model human judgment and decision-making, is an emerging area of research.

Our personal “world view” may be perceived through a complex web of biased ideas, but we also know we are a part of this interactive collective that provides valuable feedback. We should not shrug our shoulders, give up, and say “bias gonna bias.”

From the SOC Manager’s story, Billy Beane’s story, and many other stories, we can see that everyone has an intuitive, deep desire to break down barriers, collaborate, and contribute their natural talents to create a more unlimited future for all. That desire may just be stifled by cultural and workplace norms and mental bias from past experiences.

Embracing Inclusion, Innovation & Automation

The CIO of the Department of Defense, John Sherman, recently discussed implementing Zero Trust at scale, saying that cybersecurity is his top priority. He emphasized the importance of people, inclusion, communication, collaboration, and innovation. He said the new strategy has to be a “whole-of-nation approach, this is the space race for this generation” and “we need to draw on every bit of talent.”

With the Artemis I rocket being assessed for a future launch, we should remember the bravery of three African-American women who were a vital part of NASA’s early days – Katherine Johnson, Mary Jackson, and Dorothy Vaughan. These talented human beings were the courageous land “astronauts” who had to venture into hostile terrain against bias so that they could contribute and share their extraordinary talents. The movie Hidden Figures shows the incredible creativity and resolve they brought to work each day.

We all should spend more time exploring the space in our heads, releasing bias and encouraging inclusion and innovation at all levels, to make sure we empower ourselves and those around us to “shoot for the moon.”

Employees are just humans with the usual biases, often without advanced IT knowledge, trying to use information to make decisions while juggling an avalanche of passwords. Without clear data governance and user-friendly tools to manage petabytes of data and piles of passwords, years of accumulated business intelligence, password files, and PII that have been overshared, duplicated, misfiled, or left unencrypted are at risk and taking up expensive room in data storage.

New data risks will grow and valuable data may be incomplete or missing from creative decisions and projects. You can’t manage data and risk without knowing what data you have.

AI/ML data discovery automation works kind of like a “time machine” to comprehensively identify, index, and manage unstructured and structured data that is living in your data stores. Clean data up and then keep it that way with continuous, automated data discovery, workflows, and monitoring.
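To make the idea of indexing a data store more concrete, here is a minimal Python sketch that walks a folder, fingerprints every file by its content, and reports exact duplicates. It is only an illustration under simple assumptions, not how any particular data discovery product works: the function names `file_digest`, `build_index`, and `find_duplicates` are hypothetical, and a real tool would also handle permissions, very large stores, and near-duplicates.

```python
import hashlib
import os
from collections import defaultdict


def file_digest(path, chunk_size=65536):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    sha = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            sha.update(chunk)
    return sha.hexdigest()


def build_index(root):
    """Map each content digest to the sorted list of file paths under root."""
    index = defaultdict(list)
    for folder, _subdirs, names in os.walk(root):
        for name in names:
            path = os.path.join(folder, name)
            index[file_digest(path)].append(path)
    return {digest: sorted(paths) for digest, paths in index.items()}


def find_duplicates(index):
    """Keep only the digests shared by more than one file (exact copies)."""
    return {digest: paths for digest, paths in index.items() if len(paths) > 1}
```

Calling `find_duplicates(build_index("/path/to/share"))` on a folder of your choosing (the path here is a placeholder) returns groups of byte-for-byte identical files, which is one simple way to start answering the question of what data you actually have.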

You can see data discovery in action on your own data with a free 1 TB Test Drive of Anacomp’s D3 AI/ML Data Discovery Solution.

- Automate continuous data indexing, risk assessment, and monitoring of petabytes of both unstructured and structured data to manage over 950 file types

- Search and filter on actual file content, not just file attributes, to easily find hidden risks in file contents, such as PII

- Customize visualizations and workflows using user-friendly dashboards, risk filters, data tagging, metadata, and alerts
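As a rough illustration of searching file content rather than file attributes, the Python sketch below scans text for two kinds of PII and assigns a coarse risk tag. Everything in it is an assumption made for the example: the two regular expressions, the `scan_text` and `risk_tag` names, and the high/medium/low labels are hypothetical and are not taken from the D3 solution. Real PII detection needs far broader patterns and validation.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def scan_text(text):
    """Return {label: [matches]} for every pattern found in the text."""
    hits = {}
    for label, pattern in PII_PATTERNS.items():
        found = pattern.findall(text)
        if found:
            hits[label] = found
    return hits


def risk_tag(hits):
    """Assign a coarse, hypothetical risk tag from the scan results."""
    if "us_ssn" in hits:
        return "high"
    if hits:
        return "medium"
    return "low"
```

A file named `notes.txt` says nothing about risk in its name or size, but running `risk_tag(scan_text(contents))` on its text would flag it if a Social Security number were buried inside, which is the gap that content-level search is meant to close.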

This article is an updated version of a story that appeared in Anacomp’s weekly Cybersecurity & Zero Trust Newsletter. Subscribe today to stay on top of all the latest industry news including cyberthreats and breaches, security stories and statistics, data privacy and compliance regulation, Zero Trust best practices, and insights from cyber expert and Anacomp Advisory Board member Chuck Brooks.

Anacomp has served the U.S. government, military, and Fortune 500 companies with data visibility, digital transformation, and OCR intelligent document processing projects for over 50 years.